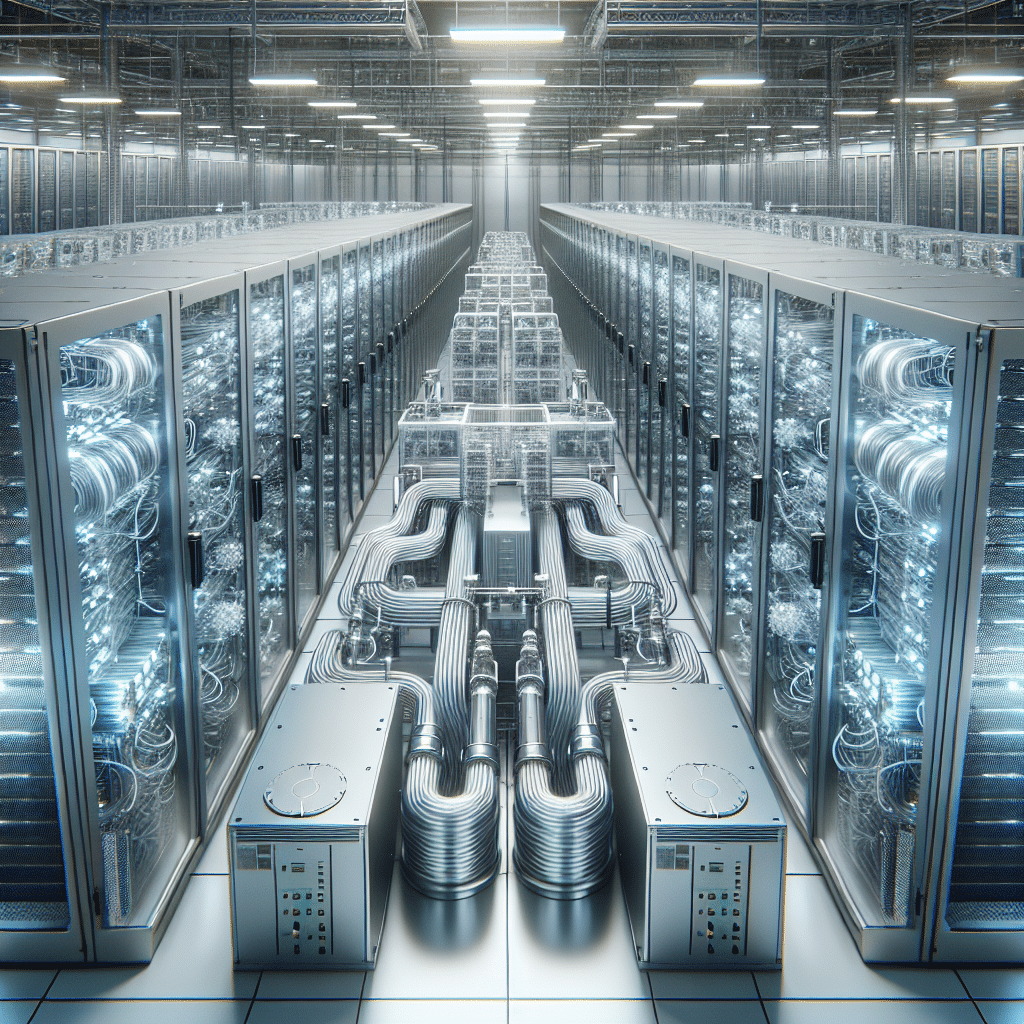

The data center industry has officially entered a new era. According to Dell’Oro Group’s latest research, the worldwide data center liquid cooling market is projected to reach approximately $7 billion in manufacturer revenue by 2029, more than double the estimated $3 billion in 2025. The growth figure matters less than what it signals: a fundamental shift in how we build and operate high-density computing infrastructure.

For years, liquid cooling was viewed as a premium option, something hyperscalers experimented with while most operators stuck with traditional air cooling. That calculus has changed dramatically. As AI chips grow more powerful and rack densities climb from 10-15 kW per rack to 80-120+ kW, air cooling alone cannot remove heat fast enough to keep equipment running safely.
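A back-of-the-envelope energy balance shows why air runs out of headroom. The heat a coolant stream carries away is Q = ṁ · c_p · ΔT (mass flow times specific heat times temperature rise). The sketch below assumes a 100 kW rack, approximate textbook property values for air and water near room temperature, a 15 K temperature rise for air, and a 10 K rise for water. These figures are illustrative assumptions, not measurements from any specific facility or product.

```python
# Approximate fluid properties near room temperature (illustrative assumptions).
AIR_DENSITY = 1.2        # kg/m^3
AIR_CP = 1005.0          # J/(kg*K)
WATER_DENSITY = 997.0    # kg/m^3
WATER_CP = 4186.0        # J/(kg*K)


def required_flow_m3_per_s(heat_w, density, cp, delta_t_k):
    """Volumetric flow needed to carry heat_w watts away at a given
    coolant temperature rise, from Q = m_dot * c_p * delta_T."""
    if delta_t_k <= 0:
        raise ValueError("delta_t_k must be positive")
    mass_flow = heat_w / (cp * delta_t_k)   # kg/s
    return mass_flow / density               # m^3/s


if __name__ == "__main__":
    rack_w = 100_000  # assumed 100 kW rack
    air = required_flow_m3_per_s(rack_w, AIR_DENSITY, AIR_CP, 15.0)
    water = required_flow_m3_per_s(rack_w, WATER_DENSITY, WATER_CP, 10.0)
    print(f"air:   {air:.2f} m^3/s (~{air * 2118.88:,.0f} CFM)")
    print(f"water: {water * 1000:.2f} L/s (~{water * 60000:.0f} L/min)")
```

Under those assumptions the rack needs roughly 5.5 m³ of air per second (on the order of 11,700 CFM) versus about 2.4 liters of water per second, over 2,000 times less volume. Moving that much air through a single rack is impractical, which is the physical reason high-density racks shift to liquid.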

JLL’s 2026 global data center outlook projects that average rack density will triple to 45 kW, with 80% liquid cooling adoption expected for new facilities. Steve Carlini, vice president of data centers and innovation at Schneider Electric, captures the shift well: data centers are moving from simply powering AI to becoming “AI factories”—infrastructure built to continuously train, fine-tune, and infer at scale.

NVIDIA’s next-generation Vera Rubin architecture requires 100% liquid cooling. There is no air-cooled option. For operators planning GPU deployments in 2026 and beyond, liquid cooling infrastructure isn’t a future consideration—it’s a present-day requirement.

While the headlines focus on the cooling hardware itself—direct-to-chip coldplates, coolant distribution units (CDUs), and immersion tanks—the real challenge often lies beneath the surface.

Power infrastructure represents a significant and frequently underestimated cost. In high-density AI sites, power supply units and power distribution units can cost two to three times more than traditional configurations. The electrical ecosystem must scale alongside the thermal management system, or bottlenecks emerge that limit overall capacity.

Supply chain concentration adds another layer of risk. Quick disconnect couplings (QDCs)—the specialized fittings essential to liquid cooling systems—remain concentrated among a handful of Western suppliers. While alternative manufacturers are emerging, certification requirements create barriers that slow diversification efforts.

The integration challenge also extends to facilities teams. Operating liquid cooling systems requires different skill sets than traditional HVAC management. Organizations must invest in training and potentially restructure operations teams to handle the new thermal architecture.

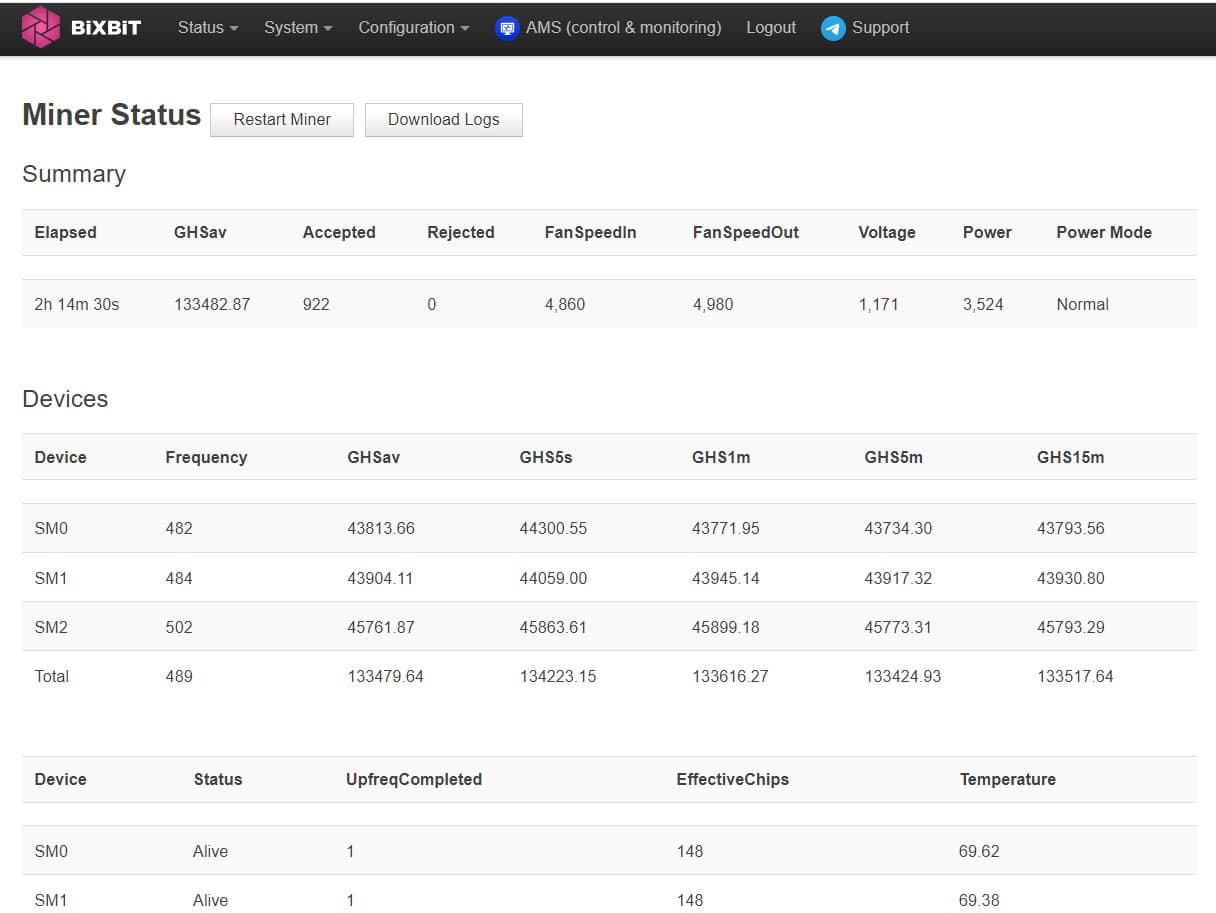

Perhaps the most underappreciated development in 2026 is the rise of thermal intelligence. Modern liquid cooling loops are now embedded with dozens of sensors monitoring flow rate, pressure differentials, inlet/outlet temperatures, and coolant chemistry. This data feeds into AI-driven data center infrastructure management (DCIM) platforms, enabling capabilities that weren’t possible with air-cooled facilities.
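DCIM vendors do not publish one standard algorithm for this, so what follows is only a hypothetical sketch of two building blocks such a platform might use. It assumes a water-like coolant, flow reported in liters per minute, and temperatures in °C. The names `loop_heat_kw` and `DriftDetector` are illustrative and do not come from any product. The first function turns flow and inlet/outlet temperatures into the heat a loop is actually removing. The second flags a reading, such as a pressure differential across a pump, that strays more than a few standard deviations from its recent baseline.

```python
from collections import deque
from statistics import mean, stdev

WATER_DENSITY = 997.0   # kg/m^3, assumed water-like coolant
WATER_CP = 4186.0       # J/(kg*K)


def loop_heat_kw(flow_lpm, inlet_c, outlet_c):
    """Heat absorbed by a coolant loop, from its flow and temperature rise."""
    mass_flow = flow_lpm / 60_000 * WATER_DENSITY   # L/min -> m^3/s -> kg/s
    return mass_flow * WATER_CP * (outlet_c - inlet_c) / 1000


class DriftDetector:
    """Flags readings that deviate from a rolling baseline by more than
    `threshold` sample standard deviations (a simple z-score test)."""

    def __init__(self, window=60, threshold=3.0, min_samples=10):
        self.history = deque(maxlen=window)
        self.threshold = threshold
        self.min_samples = min_samples

    def update(self, value):
        anomalous = False
        # Only judge once the baseline has enough samples to be meaningful.
        if len(self.history) >= self.min_samples:
            mu, sigma = mean(self.history), stdev(self.history)
            # The sigma > 0 guard skips a perfectly flat baseline.
            if sigma > 0 and abs(value - mu) / sigma > self.threshold:
                anomalous = True
        self.history.append(value)
        return anomalous
```

A rolling z-score is deliberately simple. A production predictive-maintenance model would likely need to account for load changes, seasonal inlet temperatures, and faulty sensors before attributing a deviation to pump wear or a leak.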

Predictive maintenance can now identify pump wear or micro-leaks weeks before they become problems. Dynamic workload migration allows operators to route compute jobs around emerging thermal hotspots. Real-time adjustments to AI training workloads can be made based on available thermal headroom, optimizing both performance and equipment longevity.
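Routing work by thermal headroom can be sketched as a placement rule: send a job to the rack whose cooling loop has the most spare capacity, while holding a safety margin in reserve. The `Rack` fields, the kW figures, and the 5 kW default margin below are hypothetical assumptions. A real scheduler would presumably read these values from DCIM telemetry and also weigh power, network, and job constraints.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class Rack:
    name: str
    cooling_capacity_kw: float   # heat the loop can remove at current setpoints
    current_load_kw: float       # heat currently being rejected

    @property
    def headroom_kw(self) -> float:
        return self.cooling_capacity_kw - self.current_load_kw


def place_job(racks: Sequence[Rack], job_kw: float,
              margin_kw: float = 5.0) -> Optional[Rack]:
    """Return the rack with the most thermal headroom that can absorb job_kw
    while keeping margin_kw in reserve, or None if no rack qualifies."""
    candidates = [r for r in racks if r.headroom_kw - job_kw >= margin_kw]
    if not candidates:
        return None
    return max(candidates, key=lambda r: r.headroom_kw)
```

Picking the rack with the most headroom, rather than the first one that fits, spreads heat across loops instead of driving one loop to its limit. A bin-packing policy would make the opposite trade-off, consolidating load to leave whole racks idle.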

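For data center operators, colocation providers, and enterprises planning high-density deployments, the factors above deserve immediate attention: sizing power distribution alongside cooling, managing supply chain exposure for components such as QDCs, training facilities teams for liquid systems, and putting loop telemetry to work.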

The $7 billion liquid cooling market isn’t just a number—it represents a fundamental retooling of data center infrastructure. Organizations that approach this transition strategically, with integrated power and cooling planning, will be positioned to deploy next-generation AI workloads while others wait for equipment and scramble to retrofit facilities.

The question isn’t whether liquid cooling will become standard. It already has. The question is whether your infrastructure roadmap reflects that reality.

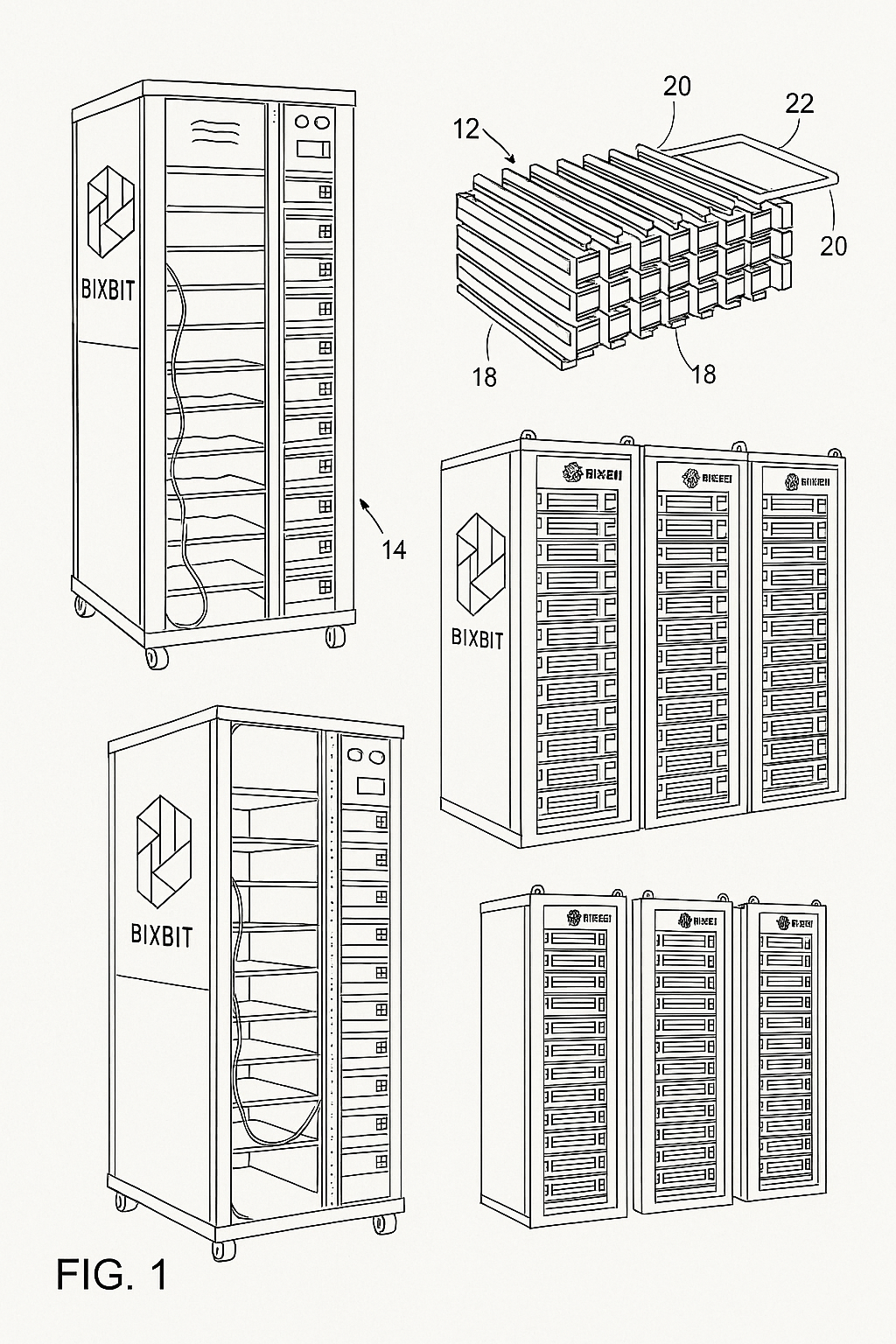

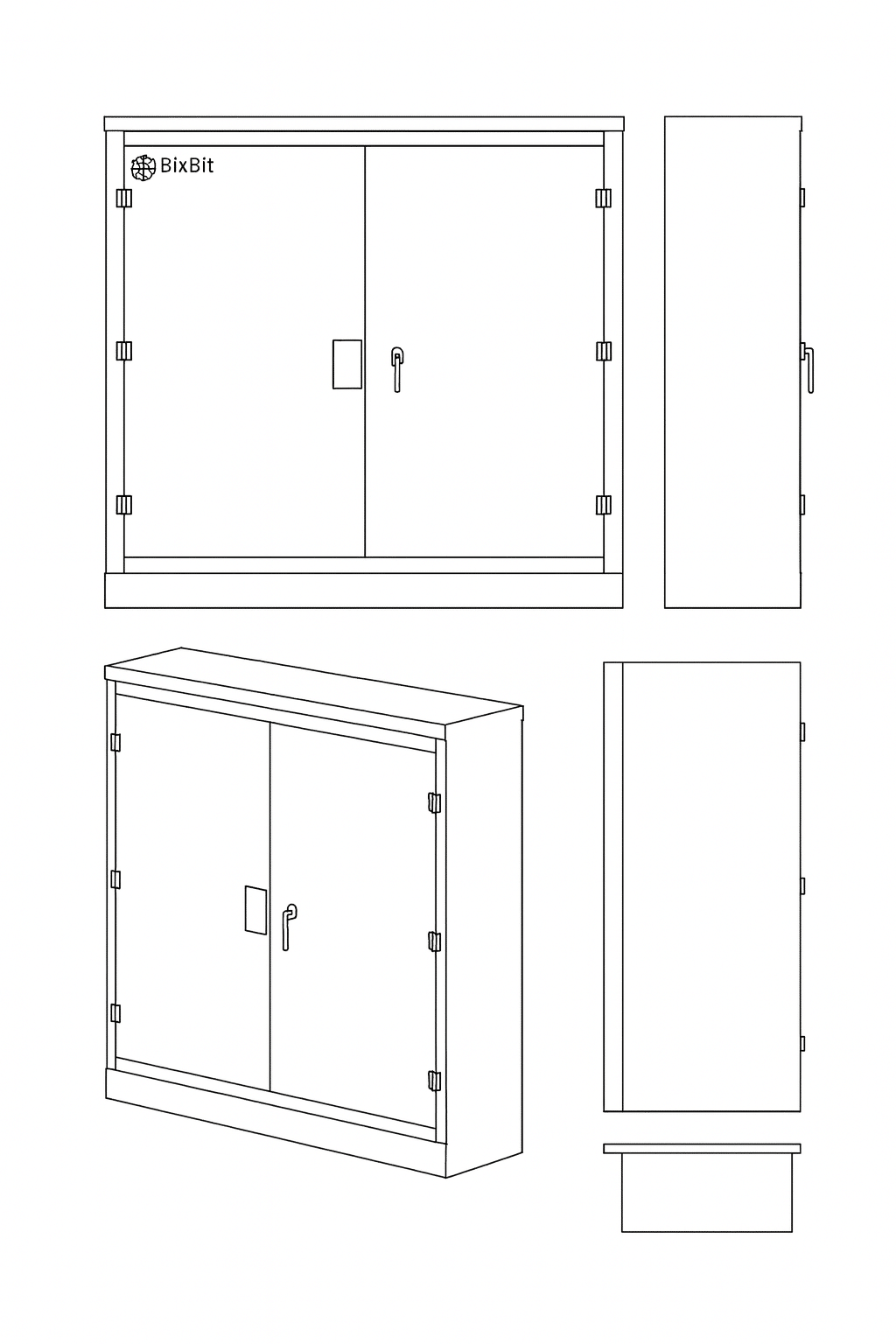

BixBit USA delivers custom-built power and cooling systems engineered for AI data centers, Bitcoin mining operations, and high-performance computing facilities. Our hydro racks and cooling distribution units are designed for the high-density deployments that define modern compute infrastructure.